Duck (Self-Expression Performance Assistive System)

Research and Design, 3D World Building, Performing - Jack Hao

Developed with UE4, Motive, Ableton, and Maya

A Collaborative Project with Quristoff Jiang and Jacqueline Yeon

April 2022 - May 2022

Project Introduction

This project builds a system that strengthens the interaction between performers’ bodies and the stage, giving performers an interactive environment in which they can better express themselves.

Problem Statement

How can we connect body movements, audio, and visual effects so that they communicate with one another in real time?

Why

When it comes to live performances, we usually think of spectacular stage scenes and harmonious combinations of body movement and background music. Yet however impressive these performances are, it is not hard to notice that almost everything in them is pre-arranged. Visuals, audio, and body movements do not respond to one another; they are fixed and rigid. Though the results can be tremendous, performers have little freedom to adjust the performance as it unfolds. This project seeks to create an environment in which performers can express themselves more freely by controlling the performance in real time.

Design Process

1. Observation & Analysis

This video captures a live performance by the band Oh Wonder. As the footage begins, the synergy between the performers' movements, the lighting, and the rhythm is immediately noticeable. The entire show was thoughtfully crafted, leaving a strong impression on me when I first watched it. However, as an avid fan who has attended several of their concerts, I've noticed a pattern of repetition in their performances, particularly in their body movements, which has started to feel somewhat monotonous. Imagine if they could interact with the lighting and certain elements of the music through their movements. This could make each performance unique. In such a scenario, they wouldn't just be dancing to the music under the lights, but rather, they would be integrating their dance with the music and the stage itself.

Freestyle dancing is a pivotal aspect of hip-hop culture. Observers are often astonished by hip-hop dancers' precise body control and the unique interpretations they bring to a single piece of music during freestyle. It's a powerful means for dancers to showcase their individual style and personality. However, this self-expression is limited by the predetermined background music. If dancers could influence the music through their intricate body movements, it would elevate the performance, making it even more captivating and tailored to the dancer's personal expression.

2. Ideation

We held a brainstorming session to decide which functions we wanted our system to have, and chose to focus on self-expression performances. Our system supports such performances by letting performers’ body movements (both fine-grained movements and the movement of the whole body through space), sound (music, not vocals), and visuals (visual effects in a virtual 3D world) interact with one another in real time.

3. Prototype & Iteration

In the first prototype, we captured the position of an object in space and used that data to control the volume of a sound.
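The prototype’s mapping can be sketched as a clamped linear map from one tracked coordinate to a gain value. This is a minimal sketch, not the project’s actual patch: it assumes the object’s height in meters is the controlling coordinate, and the 0 to 2 m range, the -60 dB floor, and the function names are hypothetical.

```python
import math


def position_to_gain(height_m: float, min_h: float = 0.0, max_h: float = 2.0) -> float:
    """Map a tracked height (meters) linearly onto a 0..1 gain, clamped at both ends.

    The 0..2 m range is a hypothetical default, not taken from the project.
    """
    if max_h <= min_h:
        raise ValueError("max_h must be greater than min_h")
    t = (height_m - min_h) / (max_h - min_h)
    return max(0.0, min(1.0, t))


def gain_to_db(gain: float, floor_db: float = -60.0) -> float:
    """Convert a linear gain to decibels, clamping silence to a hypothetical floor."""
    if gain <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(gain))
```

The decibel conversion is included because loudness is perceived roughly logarithmically and mixer volume in a DAW such as Ableton Live is displayed in dB.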

Next, we moved on to the human body. We captured the position of the performer’s right hand and used it to control an LFO (low-frequency oscillator) attached to a track in the DAW (digital audio workstation).
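In the project the LFO ran inside the DAW; the sketch below only models the idea in plain Python. It assumes the hand’s height controls the LFO’s rate; the height range, the 0.1 to 8 Hz rate range, and the names are hypothetical. The oscillator accumulates phase instead of computing sin(2πft) from the elapsed time, so changing the rate mid-performance does not make the output jump.

```python
import math


def hand_height_to_rate(height_m: float, min_h: float = 0.5, max_h: float = 2.0,
                        min_hz: float = 0.1, max_hz: float = 8.0) -> float:
    """Map the right hand's height (meters) onto an LFO rate in Hz, clamped.

    All ranges here are hypothetical defaults.
    """
    t = (height_m - min_h) / (max_h - min_h)
    t = max(0.0, min(1.0, t))
    return min_hz + t * (max_hz - min_hz)


class SineLFO:
    """Sine LFO that integrates phase, so rate changes never cause discontinuities."""

    def __init__(self) -> None:
        self.phase = 0.0  # position within the current cycle, in [0, 1)

    def step(self, rate_hz: float, dt: float) -> float:
        """Advance by dt seconds at rate_hz and return a value in [-1, 1]."""
        self.phase = (self.phase + rate_hz * dt) % 1.0
        return math.sin(2.0 * math.pi * self.phase)
```

Each frame, the tracked height would be converted with `hand_height_to_rate` and passed to `step` together with the frame time; the output would then modulate a parameter on the track (which parameter is not specified in the original).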

Our initial plan was to connect body movements to sound and to drive visual effects in Unreal from those same movements. We then realized that this left no direct interaction between audio and visuals, so our next step was audio visualization.

We then built an audio visualization for a piece of music: its volume was analyzed and used to set the sizes of a sphere and an array of cubes.

At this point, we had a system providing direct interactions among performers’ body movements, audio, and visuals, so we decided to try it in a real performance.

We then used the design in a real performance, which explored the performer’s self-reflection as an individual in both the virtual and the real world.

In this performance, the performer set out to show the roles he plays in both worlds.

Performer’s Statement:

People these days often have more than one avatar across all kinds of virtual worlds and online communities. This raises the question: what is the connection between these avatars and the real people behind them? Are they extensions of people's minds, or just tools for self-expression? Many trend leaders on the internet are loved and followed by millions of fans. They appear sometimes in pictures and videos, sometimes as avatars, and sometimes only their voices can be heard. It seems impossible to truly know not only these so-called “internet leaders” but anyone else on the internet. Everything online seems carefully designed and tailored to people’s tastes, yet lacking in sincerity.

The ducks in the performance are ironic representations of netizens: they swim, alive and kicking, in the ocean, just as netizens surf the internet wildly and without restraint. The huge duck at the back of the stage, followed by all the small ducks, represents the internet’s trend leaders.

The performer wants to be an outsider to the blended real and virtual worlds, apart from the chaos. Thus, the performer’s avatar is an abstract, shining, human-like creature rather than a duck. Unfortunately, no one can escape the carnival of indulgence and freedom that the internet has brought to the world. The performer is part of that world, and his avatar dances with the ducks on the same stage.

4. Performance & Feedback

We staged the performance (a demo) in a black box theater set up for motion capture and, luckily, drew a small audience. The feedback was mostly positive: many audience members were impressed by how coherently all the interactions worked together throughout the performance. However, some expected to be able to interact with the performance themselves. As the performer, I believe the system succeeded in helping me express myself; I felt a strong connection between myself and the whole stage.

Technical Showcase

Audio Visualization

I used the audio analyzer to get the volume of the music and used the data to set the sizes of an array of cubes.
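The sketch below reimplements the idea outside Unreal as an illustration, not as the project’s Blueprint. It assumes that “volume” means the RMS level of each audio block (samples in the range -1 to 1) and that each cube displays the volume of a successively older block, producing a scrolling bar graph; the cube layout, gain, and names are hypothetical.

```python
import math
from collections import deque
from typing import List, Sequence


def rms(block: Sequence[float]) -> float:
    """Root-mean-square level of one block of samples (0.0 for an empty block)."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))


class CubeBars:
    """Keeps the most recent volumes and turns them into one scale per cube.

    The history layout (newest on the left) is a hypothetical design choice.
    """

    def __init__(self, count: int = 8, base_scale: float = 1.0, gain: float = 4.0) -> None:
        # deque(maxlen=...) drops the oldest value from the right on appendleft.
        self.history = deque([0.0] * count, maxlen=count)
        self.base_scale = base_scale
        self.gain = gain

    def update(self, block: Sequence[float]) -> List[float]:
        """Push the latest block's RMS and return the new scale for each cube."""
        self.history.appendleft(rms(block))
        return [self.base_scale + self.gain * level for level in self.history]
```

The base scale keeps cubes visible during silence, while the gain controls how strongly loud passages stretch them.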

Abstract Avatar

I used the skeleton of a human-like avatar and attached a Niagara particle system to it.

Particle System

The small, flying, pixelated ducks are generated by a Niagara system, and I used the volume of the music to control their velocity.
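One way to express this mapping is to scale each duck’s base velocity by a multiplier that grows with loudness. In Niagara the multiplier could be exposed as a user parameter set from a Blueprint; the sketch below only shows the arithmetic. It assumes volume is normalized to 0..1, and the 0.5x to 3x range and the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def duck_velocity(base: Vec3, volume: float,
                  min_mult: float = 0.5, max_mult: float = 3.0) -> Vec3:
    """Scale a base velocity by a multiplier that rises linearly with volume (0..1).

    The multiplier range is a hypothetical choice, not taken from the project.
    """
    v = max(0.0, min(1.0, volume))  # clamp so silence or clipping stays in range
    mult = min_mult + v * (max_mult - min_mult)
    return Vec3(base.x * mult, base.y * mult, base.z * mult)
```

Scaling only the magnitude keeps each duck’s direction fixed, so the ducks speed up on loud passages without changing their flight paths.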

Motive to UE4

Motion capture is handled mainly by Motive. To use the motion capture data in Unreal, we added the OptiTrack plug-in and used the Animation Blueprint shown below to apply the streamed data to the character’s skeleton.

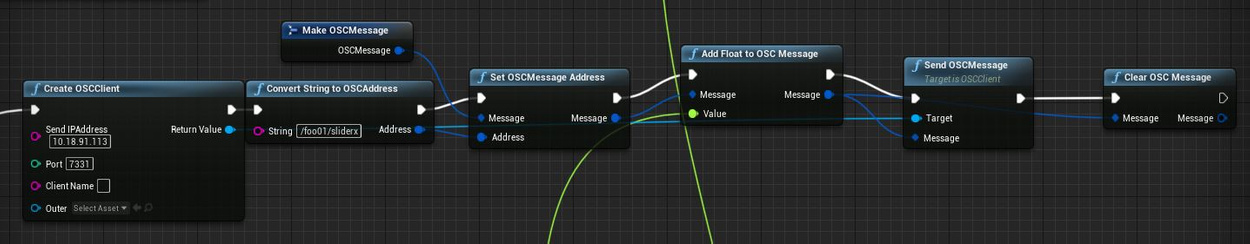

Communication between UE4 and Ableton

I used OSC (Open Sound Control) to send messages between Unreal and Ableton. The performer’s position and the distance between the performer’s two hands are both sent to Ableton this way to control the music.

Camera Movements (Motion Capture)

Most of the cameras I placed in the Unreal world can be controlled with the keyboard and mouse. However, to better simulate the shake and movement of a handheld camera, as seen in the video above, we motion-captured a real screen and used it to drive a virtual camera in Unreal. A person can hold the screen and use it as a camera during the performance.
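Driving the virtual camera amounts to copying the tracked screen’s pose onto it every frame. The sketch below covers only the position part and assumes the tracked position arrives in meters and has already been converted to Unreal’s axis convention; the stage origin, the scale factor, and the function name are hypothetical. Unreal measures distance in centimeters, hence the factor of 100. The rotation would be copied across unchanged so that the operator’s real hand shake carries into the shot.

```python
from typing import Sequence, Tuple

CM_PER_M = 100.0  # Unreal Engine units are centimeters


def tracked_to_camera(tracked_pos_m: Sequence[float],
                      stage_origin_cm: Sequence[float],
                      scale: float = 1.0) -> Tuple[float, float, float]:
    """Place the virtual camera relative to a (hypothetical) stage origin, in Unreal units.

    scale = 1.0 maps real movement 1:1; larger values let a small capture
    volume cover a larger virtual stage, which also magnifies hand shake.
    """
    x, y, z = (o + scale * p * CM_PER_M for p, o in zip(tracked_pos_m, stage_origin_cm))
    return (x, y, z)
```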

Takeaways

The visual part of the demo performance was made entirely with UE4. I learned a wide variety of the functions and plug-ins Unreal Engine supports, from online tutorials and from my instructor. I also learned the basic motion capture pipeline, from setting up the required devices to calibration and data cleaning. Because this was a three-person collaboration in which I handled all the technical work and part of the design while the other two members were music professionals, building consensus and communication within the group, and reliable communication between the software tools, were both vital. This project significantly improved my ability to work as part of a team.